Safer for kids

The package consists of three features spread across iOS, iPadOS and macOS. One of them is a new system to detect child abuse material, which we wrote about earlier today. At that point it was still a rumor; Apple has now revealed the full set of measures. They are all listed on this page.

The features are meant to prevent so-called CSAM (Child Sexual Abuse Material) from spreading. Children spend a lot of time online and run the risk of being exposed to unsuitable material. At the same time, children run the risk of ending up in shocking images themselves. All three features are designed with privacy in mind. This means that Apple can pass on information about criminal activities to the authorities, while the privacy of law-abiding users is not at stake.

Scanning iCloud Photos is the most important part. If CSAM is discovered, the relevant account will be flagged. Apple will also report the case to a missing children's organization and cooperate with law enforcement agencies in the US. Apple emphasizes that for the time being the system will only be used in the US. There may be a further rollout at a later date.

These are bad things. I don't particularly want to be on the side of child porn and I'm not a terrorist. But the problem is that encryption is a powerful tool that provides privacy, and you can’t really have strong privacy while also surveilling every image anyone sends.

— Matthew Green (@matthew_d_green) August 5, 2021

Apple does not look at the photos themselves. Instead, intelligence on the device matches them against a database of already known CSAM images, which have been converted to unreadable hashes. This is a complicated process that uses cryptographic techniques to guarantee privacy.
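To give a rough idea of what matching against a database of hashes means, here is a minimal sketch in Python. It is a simplification under stated assumptions: it uses exact SHA-256 digests, whereas Apple's actual hashing and cryptographic protocol are more involved and are not reproduced here. The names `digest`, `KNOWN_HASHES`, `is_known` and `flag_matches` are hypothetical and chosen only for illustration.

```python
import hashlib


def digest(photo_bytes: bytes) -> str:
    """Return a hex digest that stands in for the 'unreadable hash' of a photo."""
    return hashlib.sha256(photo_bytes).hexdigest()


# Hypothetical database: only hashes are stored, never the images themselves.
KNOWN_HASHES = {digest(b"known-image-1"), digest(b"known-image-2")}


def is_known(photo_bytes: bytes, known_hashes=KNOWN_HASHES) -> bool:
    """Check whether a photo's hash appears in the database of known hashes."""
    return digest(photo_bytes) in known_hashes


def flag_matches(photos: dict) -> list:
    """Return the names of the photos whose hash matches the database."""
    return [name for name, data in photos.items() if is_known(data)]


if __name__ == "__main__":
    library = {"a.jpg": b"known-image-2", "b.jpg": b"holiday photo"}
    print(flag_matches(library))
```

The point of the sketch is that the check only compares hashes: the database holder never needs to distribute the original images, and a photo that does not match reveals nothing beyond the fact that it did not match.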

A suspicious account will be blocked after a manual inspection. Users can appeal if they believe their account has been unjustly closed. The detection only applies to iCloud Photos and not to photos that are stored only locally on the device.

An extensive technical explanation can be found here in PDF format.

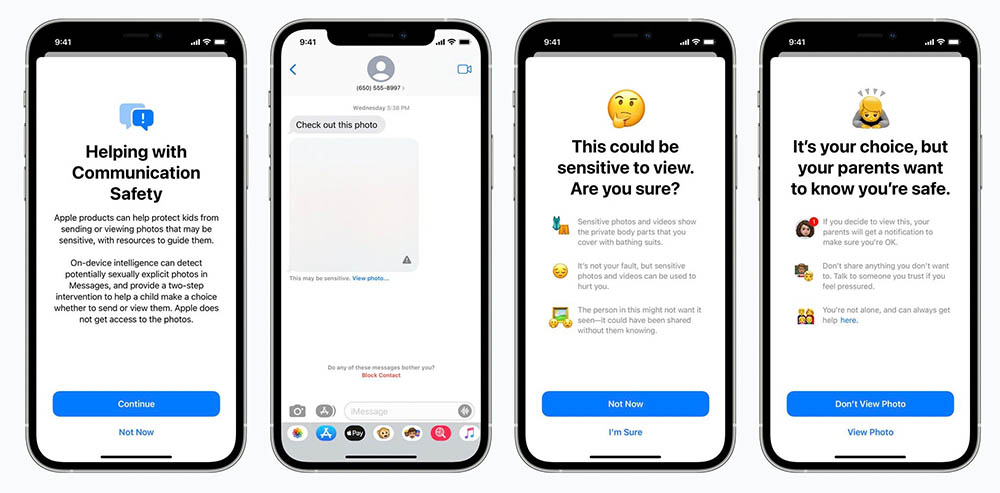

More security when using iMessage

Apple will also introduce new features to ensure parents are aware of how their children communicate online. If children receive a sexually explicit photo, it will automatically be blurred and they will receive a warning. If they still want to view the photo, the message will also be forwarded to the parents.

This feature also uses machine learning to analyze photos and determine whether they are explicit images. Apple will not receive a copy of the photo.

Siri and search help you further

Siri and search also become safer for users. For example, you can ask Siri for help if you are confronted with CSAM. Siri will then direct you to the right resources. Apple emphasizes that it has considered not only privacy, but also possible abuse by governments in countries where the situation is less safe. Nor has encryption been weakened to enable these new tools: end-to-end encrypted photos cannot be analyzed.

Still, security researchers aren't completely reassured. Apple relies on a database of hashes that cannot be inspected from the outside. If an innocent photo happens to have the same hash as a known CSAM image, it will be difficult for you as a user to prove that the two photos are very different.
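The worry about two different photos sharing one hash can be illustrated with a toy example. The sketch below assumes a very crude "average hash" over tiny grayscale images, written here purely for illustration. It is not Apple's algorithm, and the pixel values are made up.

```python
def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, 1 if brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


# Two made-up 2x2 grayscale images with clearly different pixel values.
photo_a = [[10, 200], [220, 30]]
photo_b = [[90, 140], [160, 60]]

if __name__ == "__main__":
    print(average_hash(photo_a))  # 0110
    print(average_hash(photo_b))  # 0110
    print(photo_a == photo_b)     # False
```

Both images produce the hash `0110` even though their contents differ. A user who only sees that their photo "matched" has no way to inspect the database entry behind it, which is the concern the researchers raise.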