There is evidence of another variant of Nvidia's H100 compute accelerator with the Hopper GPU. It is said to offer 120 GB of fast HBM2e memory instead of the previous 80 GB. For now, however, this remains a rumor based on a screenshot of the Windows Device Manager.

For AI and deep learning workloads in data centers and supercomputers, Nvidia currently offers the H100 series with GPUs from the Hopper family and 80 GB of memory. At 26 TFLOPS of FP64 compute performance and 2 TB/s of memory bandwidth, the expansion-card version for the classic PCIe slot is slower than the SXM module (title picture) with 34 TFLOPS and 3.35 TB/s. However, at up to 700 watts, the TDP of the SXM model is twice as high.

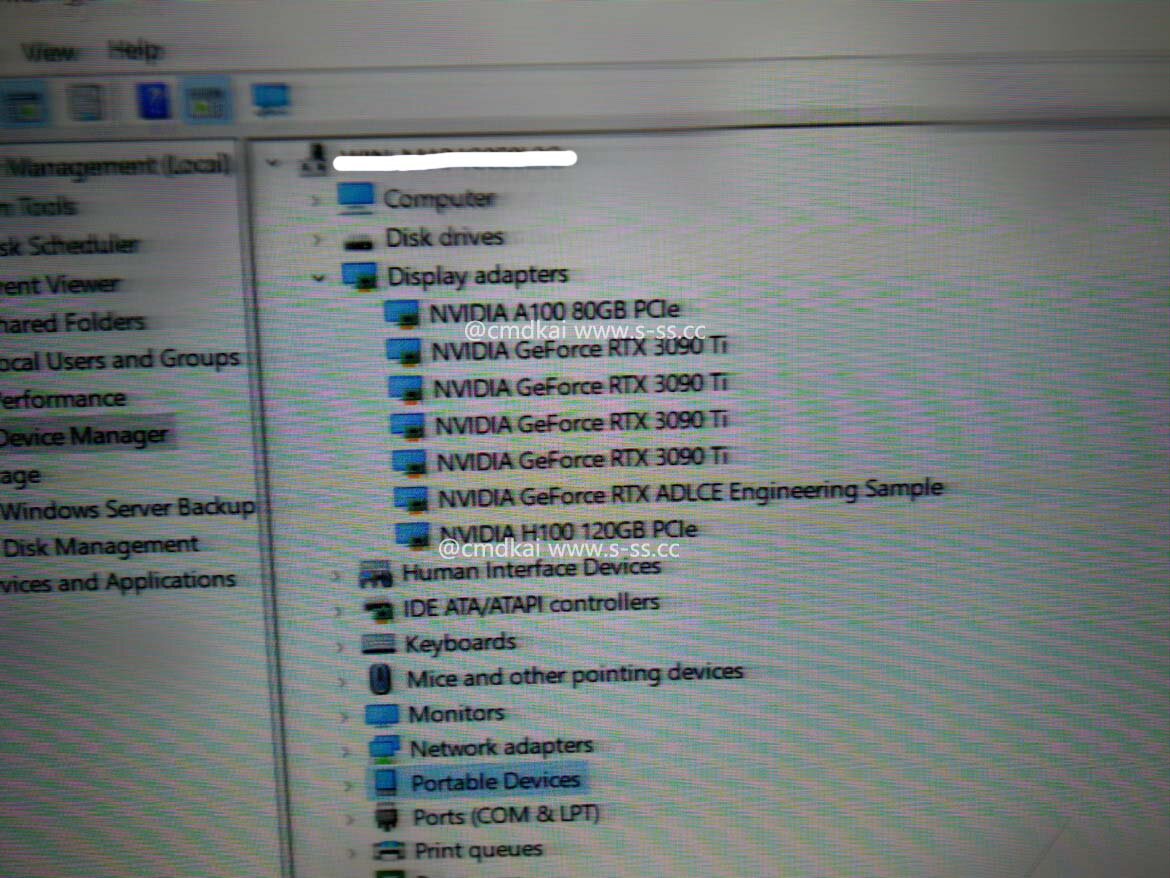

Screenshot shows the H100 with 120 GB

A screenshot of unclear origin shows the alleged H100 with 120 GB. According to the Windows Device Manager, the system contains a large number of graphics cards. In addition to the well-known GeForce RTX 3090 Ti and A100 models, the aforementioned H100 with 120 GB and an “RTX ADLCE Engineering Sample” are listed. The latter is said to be a sample of the new GeForce RTX 4000 series (Ada Lovelace).

Nvidia H100 120GB in Device Manager (Image: s-ss.cc (@cmdkai))

The user @cmdkai writes on his website that the H100 with 120 GB is supposed to use the full configuration of the GH100 GPU. Accordingly, apart from the memory, the PCIe card would have (approximately) the specifications of the SXM version. Other pictures on his Twitter account suggest that he deals with server hardware frequently. This is by no means sufficient proof, but it makes the “leak” appear more authentic.

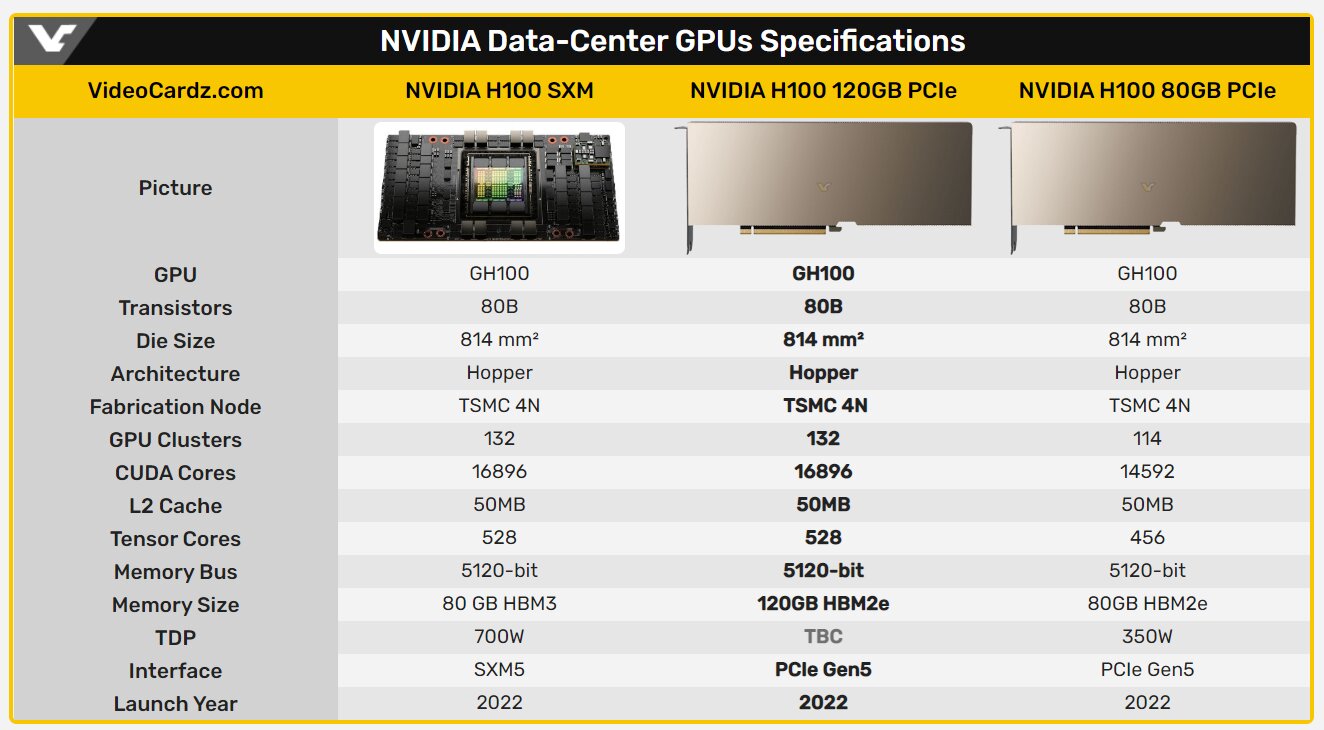

VideoCardz compared the (presumed) specifications of the three H100 versions:

Presumed key data of an Nvidia H100 with 120 GB (Picture: VideoCardz)

If you want to know more about Nvidia's Hopper architecture, you can find the details in the article “Nvidia Hopper: The new architecture for supercomputers is so fast” by ComputerBase author Christopher Riedel.