Will Nvidia's calculation work out with the new GeForce RTX 4000 series of graphics cards, named after the British mathematician Augusta Ada King-Noel, Countess of Lovelace, better known as Ada Lovelace? How do ComputerBase readers rate the GeForce RTX 4090, the GeForce RTX 4080 and DLSS 3.0?

Table of Contents

- Ada Lovelace should be a big hit

- Too many downsides or a convincing release?

- Is 1,099 euros too much to get started?

- Are two GeForce RTX 4080 one too many?

- Will DLSS 3 be a game changer?

- Are you planning to buy a GeForce RTX 4000?

- Which GeForce RTX 4000 are you planning to buy?

- Participation is expressly desired

- Overview of all previous Sunday questions

Ada Lovelace should be a big hit

With the GeForce RTX 4090 and two variants of the GeForce RTX 4080, Nvidia has presented the first three graphics cards of the Ada Lovelace generation for gamers. Although independent tests are still pending, Nvidia's official figures alone are impressive and point to massive performance gains over the direct predecessors of the GeForce RTX 3000 series ("Nvidia Ampere").

Too many downsides or a convincing release?

But there are also some downsides: entry into the new generation starts at 1,099 euros, and that for a graphics card called the GeForce RTX 4080 12GB, which many consider to be more of a GeForce RTX 4070.

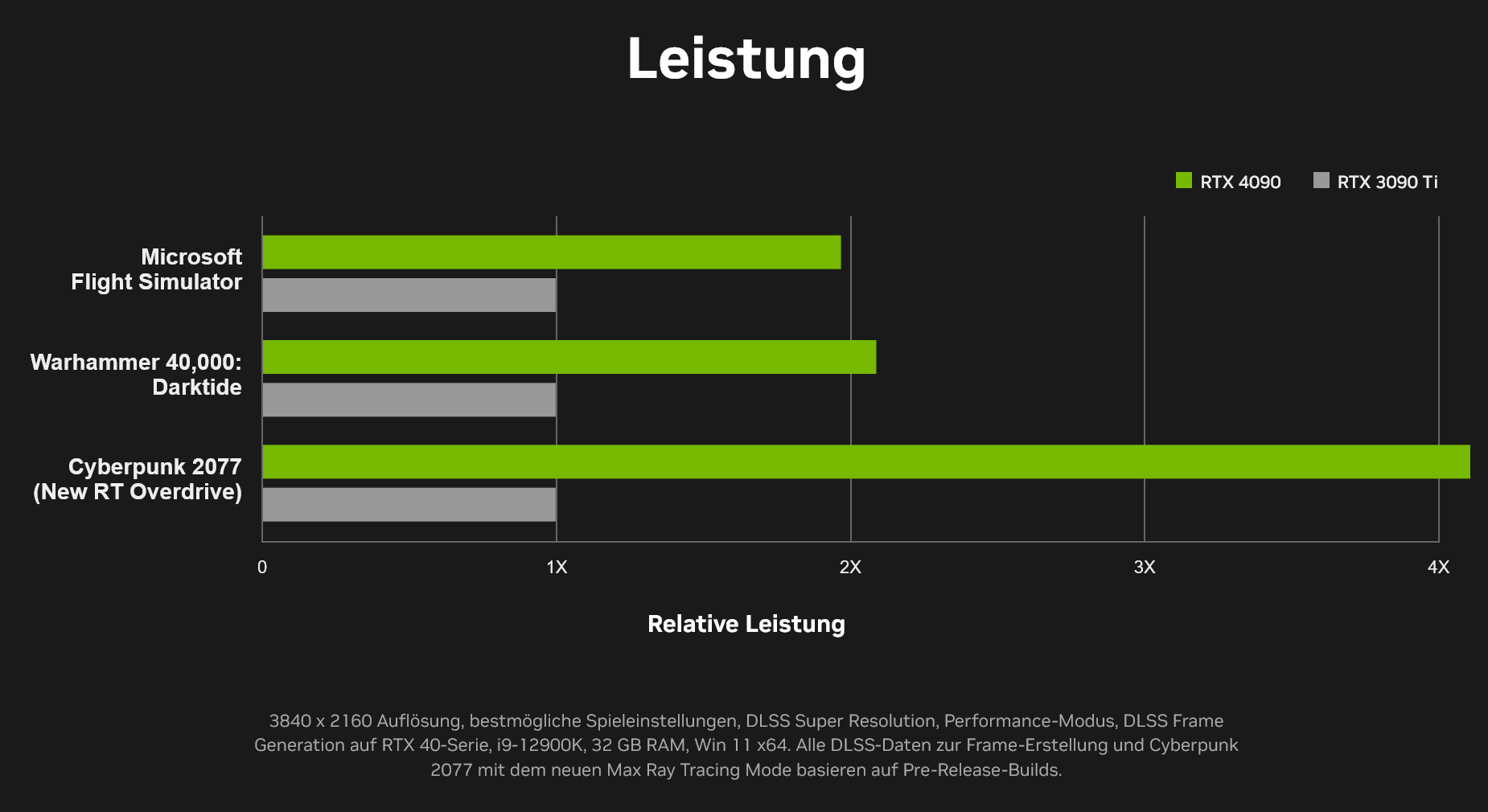

Nevertheless, Nvidia promises big performance gains over the previous Ampere flagship, the GeForce RTX 3090 Ti (test).

- 2× FPS in Microsoft Flight Simulator

- 2× FPS in Warhammer 40,000: Darktide

- 3× FPS in Portal with RTX (mod)

- 4× FPS in Cyberpunk 2077

- 4× FPS in Racer X

*) Manufacturer information (source)

However, these manufacturer benchmarks should be treated with caution for now: they always show relative performance with Nvidia's AI upsampling DLSS enabled, in this case DLSS 3.0 in Performance mode.

Nvidia GeForce RTX 4090 vs. Nvidia GeForce RTX 3090 Ti (Picture: Nvidia)

At the same time, Ada Lovelace is said to be twice as energy-efficient as Ampere. Nvidia did not give exact figures, but one slide showed 450 watts, the value previously speculated for the GeForce RTX 4090 FE, while according to the latest rumours custom designs could draw up to 660 watts.

Was the release of the GeForce RTX 4000 convincing?

- Yes, the new architecture and the graphics cards based on it look very interesting

- No, I had expected a lot more from the release and I'm disappointed

- I can't say yet, I haven't been able to form a clear picture of it

- I don't care, I'm waiting for independent tests and reviews anyway

- Abstain

Just a few days later, Nvidia demonstrated a retail GeForce RTX 4090 FE with a boost clock of up to 2.85 GHz to the press; according to Nvidia, it achieved up to 183 percent more FPS in the action role-playing game Cyberpunk 2077 with DLSS 3.0 (Quality).

Is 1,099 euros too much to get started?

Whether Ada Lovelace lives up to Nvidia's promises for the architecture will have to be shown by independent tests, such as the editors' own. Quite apart from that, do you think 1,099 euros for entry into the GeForce RTX 40 Series, the official name of the new graphics card family, is justified or clearly excessive?

Is 1,099 euros for entry into the GeForce RTX 4000 too much?

- Yes, I think 1,099 euros is absolutely excessive for getting started

- No, I think 1,099 euros is completely justified for getting started

- Abstain

It came as something of a surprise that Nvidia is not launching with a GeForce RTX 4090, 4080 and 4070, but reportedly rebranded the third model as a GeForce RTX 4080 "in a last-minute decision". The two GeForce RTX 4080 differ not only in memory capacity but also in the GPU.

The larger GeForce RTX 4080 with 16 GB of graphics memory is based on the AD103-300 with 76 compute units and a 256-bit memory interface, while the smaller GeForce RTX 4080 with 12 GB relies on the AD104-400 with 60 compute units and a mere 192-bit memory interface for GDDR6X.

The two GeForce RTX 4080 are also separated by a large price gap: while the 16 GB version costs 1,469 euros, the 12 GB model will sell for 1,099 euros at launch, although custom designs are once again expected to be more expensive.

Are two GeForce RTX 4080 one too many?

Is the GeForce RTX 4080 12GB legitimately named, or is it actually a GeForce RTX 4070 that was placed in the prestigious 80 series only to justify an artificially inflated price? Are two GeForce RTX 4080 twice as good or one too many?

How do you rate the split into two GeForce RTX 4080?

- I think the split into GeForce RTX 4080 16GB and 12GB is a good thing

- For me, the GeForce RTX 4080 12GB is actually a GeForce RTX 4070

- I don't care about the designations, what matters is the performance

- Abstain

Will DLSS 3 be a game changer?

In addition to the GeForce RTX 4090 and the two GeForce RTX 4080, Nvidia also presented the latest version of its AI upsampling technology. Nvidia DLSS 3 is said to deliver up to four times the FPS of native rendering, but requires a GeForce RTX 4000. DLSS 3 builds on the upsampling of DLSS 2 (test) and adds AI-generated intermediate frames, but do the up-to-fourfold FPS figures in the manufacturer benchmarks conceal a much less impressive real performance gain?

Would DLSS 3 be a reason for you to buy a GeForce RTX 4000?

- Yes

- No

- Abstain

Are you planning to buy a GeForce RTX 4000?

Which GeForce RTX 4000 are you planning to buy?

Nvidia will sell the GeForce RTX 4090 from October 12 at an MSRP of 1,949 euros, while the GeForce RTX 4080 16GB and GeForce RTX 4080 12GB will follow in November for 1,469 and 1,099 euros respectively. Are you planning to buy a GeForce RTX 4000, and if so, which one?

Are you planning to buy a GeForce RTX 4000?

- Yes, I will definitely buy a GeForce RTX 4000

- No, I'm definitely not going to buy a GeForce RTX 4000

- I'm not sure yet whether I'm going to buy a GeForce RTX 4000

- Abstain

Which GeForce RTX 4000 are you planning to buy?

- A GeForce RTX 4090 with 24GB GDDR6X

- A GeForce RTX 4080 with 16GB GDDR6X

- A GeForce RTX 4080 with 12GB GDDR6X

- Waiting for the smaller models

- I won't buy any

- Abstain

The final question: do you think Nvidia's calculation with Ada Lovelace will work out? A significantly higher entry price, a very noticeably cut-down GeForce RTX 4080 12GB and a huge gap to the flagship: can that work? Or will AMD come out on top with RDNA 3 and Navi 3x in the Radeon RX 7000?

Will Nvidia's calculation work out?

- Yes, Nvidia will have significantly faster graphics cards in this generation

- No, Nvidia is shooting itself in the foot here and will be at a disadvantage against AMD and RDNA 3

- Abstain

Participation is expressly desired

The editors would appreciate well-founded and detailed reasons for your choices in the comments on this Sunday question. If you have completely different favorites, please share them in the comments.

Readers who have not yet taken part in previous Sunday questions are welcome to do so. In particular, lively discussions about the recent surveys are still going on in the ComputerBase forum.

Overview of all previous Sunday questions

- EVGA × Nvidia = Divorce: How do you rate the separation of the two GPU specialists?

- From vacuum robot to household android?

- What has been your biggest overclocking success so far?

- Microsoft Xbox Series X or maybe the Sony PlayStation 5?

- Have you tried Linux, and how was the experience?

- Which graphics card is the legend among legends?

- (How) are you trying to reduce your power consumption?

- Real system optimizers or just snake oil?

- Should ComputerBase offer merchandise again?

- The Sunday questions from the ComputerBase community

- FIFA or Pro Evolution? Street Fighter or Tekken?

- Which game console do you remember best?

- What do you think about crypto, tokens and blockchain?

- JEDEC, XMP profile or do you prefer real overclocking?

- Which manufacturer offers good and which bad tools?

- How do you see the current development of games?

- How and when did you first go on the internet?

- Do you optimize your gaming PCs with the help of OC and UV?

- VR gaming was, is and will always be something for the niche, right?

- What is the name of your RGB boss?

- Who still remembers Napster, KaZaA, eDonkey and eMule?

- Have you ever owned a graphics card with a dual GPU?

- What Sunday question would you wish for?

- Is open source an alternative for you?

- Windows Defender offers sufficient protection, doesn't it?

- Which architecture was more groundbreaking: Zen or Core?

- PlayStation, Xbox or Nintendo?

- The future is games from the cloud! Or is it?

- Windows 11 or Windows 10 and why?

- Can Intel Arc already compete with AMD and Nvidia?

- Ryzen 7000 or 13th Gen Core: who's going to win?

- Will Valve help Linux achieve a breakthrough in gaming in 2022?

- Where are we headed in terms of power consumption?

- One week of Log4Shell: what did it mean for you?

- AMD Radeon or Nvidia GeForce for the next-gen?

- ARM or x86, who owns the future of servers and PCs?

Do you have ideas for an interesting Sunday question? The editors are always happy to receive suggestions and submissions.
