In recent weeks, Apple's plans to scan users' photos for child abuse have drawn a constant stream of criticism. The scanning is part of a broader set of measures Apple is introducing to combat the spread of child abuse material. Several organizations had already voiced criticism individually, including experts from the Center for Democracy & Technology. That same organization has now drafted an open letter together with more than 90 other organizations worldwide. Their message is simple: they want Apple to abandon its plans.

Open letter to Apple: “Stop plans to scan photos”

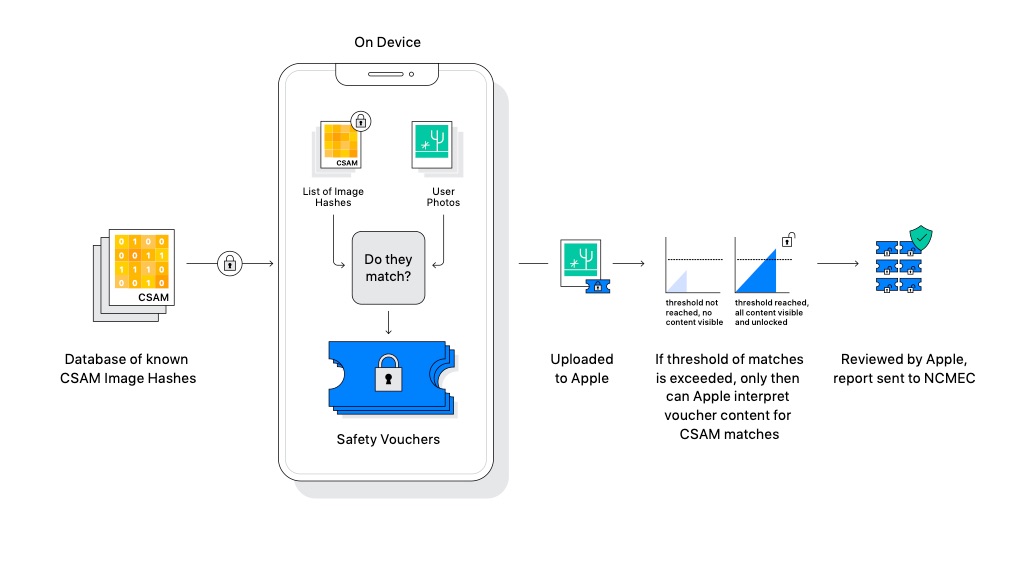

The letter focuses on two features: the scanning of iMessage for potentially harmful photos, and the scanning of photos to detect CSAM (Child Sexual Abuse Material). Although the features are currently planned only for the US, organizations from all over the world have signed the open letter. From the Netherlands, Global Voices has signed on; this organization reports on human rights and citizen media worldwide.

The organizations fear that enabling CSAM detection in iCloud Photos sets a precedent for other types of surveillance. According to the open letter, Apple could come under heavy pressure from countries, governments and legislation to permit other kinds of scanning. Apple has stated in its own FAQ that the feature serves solely to detect child abuse material and that other requests will be refused. The signatories, however, do not believe Apple can offer that guarantee. In their view, Apple is laying the groundwork for wider surveillance and censorship.

The organizations also argue that the warning feature in iMessage puts children at risk, in particular LGBTQ+ young people whose parents are not accepting. Parents must enable the feature themselves, and the system assumes that parents and child have a good relationship. In practice that is not always the case, the letter states, and the feature could create further imbalances and put the child in danger.

The organizations' full letter can be read here. It remains to be seen whether Apple will act on the criticism. In recent weeks, Apple has tried to explain in interviews how the system works and why it cannot be abused. But with this much criticism from so many organizations, Apple can hardly avoid responding.

More about Apple's measures

Earlier this week, it also emerged that Apple's own employees have criticized the plan to scan photos for child abuse. Read more on CSAM detection and photo scanning to learn how the system works.