HBM3 was on the Rambus roadmaps for years but recently disappeared from them. Now it is back in the spotlight thanks to competitors: SK Hynix recently went on the media offensive and showed the first technical data. Rambus wants to surpass that by a wide margin.

Long discussed and not yet finished

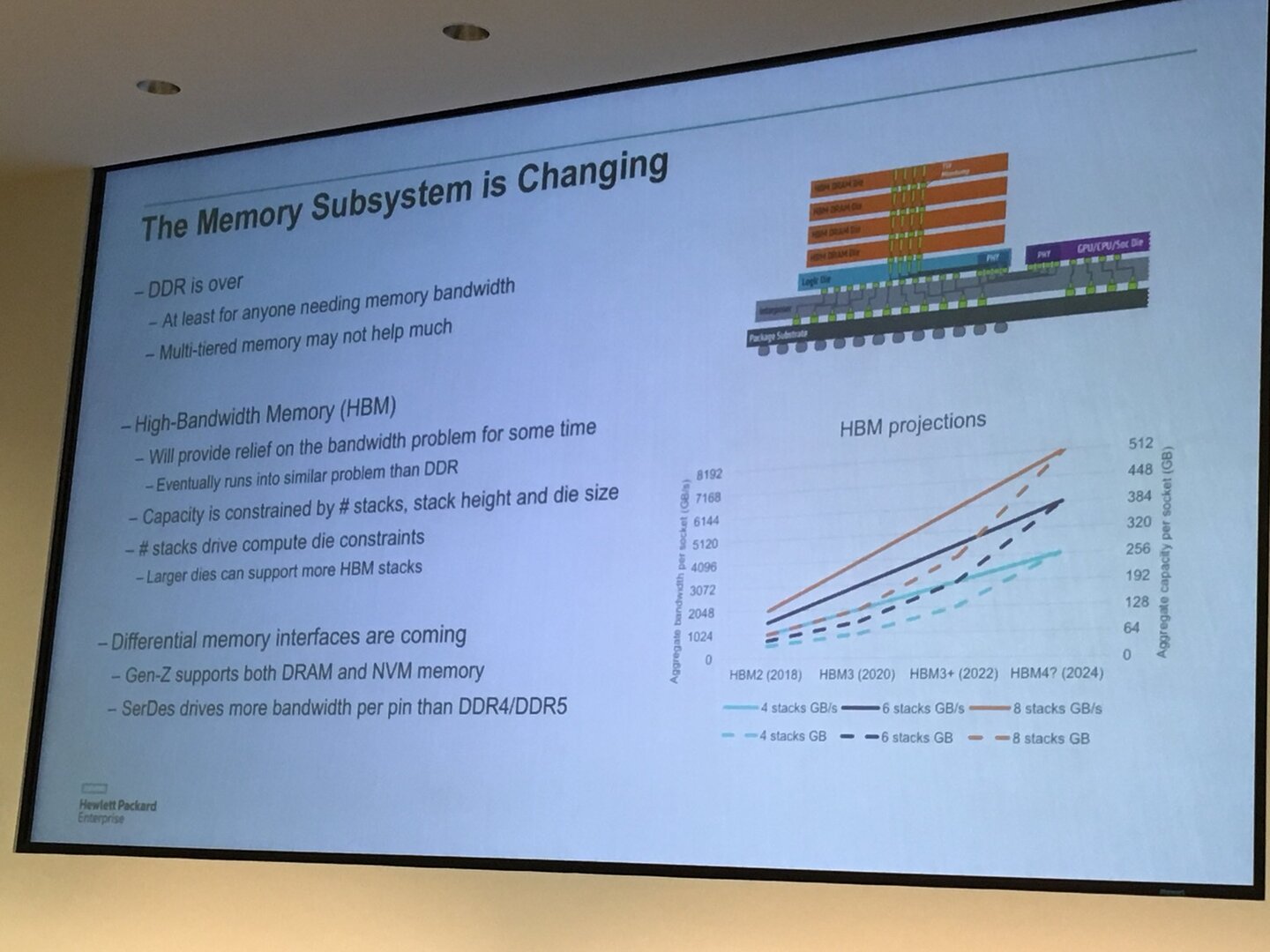

Five years ago, ComputerBase attended an event organized by SK Hynix and Samsung as part of Hot Chips 28 that dealt with HBM3, among other things. At the time, the figure discussed for HBM3 was 512 GB/s of bandwidth, a big number back then. A lot has happened in the five years since, however. HBM2 did not lead straight to HBM3; HBM2e was inserted as an intermediate step. And of the 2018 roadmaps that placed HBM3 in 2020, nothing but a picture remains.

HBM3 was supposed to arrive in 2020 (Image: Twitter)

SK Hynix recently quoted 665 GB/s of bandwidth “and more” for the first HBM3 generation, which corresponds to a data rate of 5.2 Gbit/s per pin. Rambus wants to surpass that and deliver 1.075 TB/s of bandwidth, which corresponds to 8.4 Gbit/s per pin. On paper, that would be more than double the bandwidth of current HBM2e, which tops out at 460 GB/s. Rambus adds that its solution also supports lower data rates of 4.8, 5.6 and 6.4 Gbit/s.
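How the per-pin data rates translate into the bandwidth figures above can be checked with a short calculation. This is a minimal sketch, assuming the 1024-bit interface per stack used by HBM, HBM2 and HBM2e also applies to HBM3 (the article does not state the bus width); the 3.6 Gbit/s value for HBM2e is inferred from its 460 GB/s maximum, and the function name `stack_bandwidth_gbs` is purely illustrative.

```python
# Sketch: peak bandwidth of one HBM stack from the per-pin data rate.
# Assumption: a 1024-bit interface per stack, as with HBM/HBM2/HBM2e.
BUS_WIDTH_BITS = 1024


def stack_bandwidth_gbs(pin_rate_gbit: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Return peak bandwidth in GB/s: pins x Gbit/s per pin / 8 bits per byte."""
    return pin_rate_gbit * bus_width_bits / 8


if __name__ == "__main__":
    rates = [
        ("HBM2e maximum (inferred)", 3.6),
        ("SK Hynix HBM3 entry", 5.2),
        ("Rambus lower step", 4.8),
        ("Rambus lower step", 5.6),
        ("Rambus lower step", 6.4),
        ("Rambus maximum", 8.4),
    ]
    for label, rate in rates:
        print(f"{label:>26}: {rate} Gbit/s -> {stack_bandwidth_gbs(rate):.1f} GB/s")
```

Under that assumption, 5.2 Gbit/s yields about 665.6 GB/s and 8.4 Gbit/s about 1075.2 GB/s, matching the quoted figures; the lower Rambus steps land at roughly 614, 717 and 819 GB/s.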

The catch is that Rambus supplies only the controller and related interface functions; it does not develop the memory itself. That remains the job of the DRAM manufacturers. An HBM3 chip that reaches the maximum specification right away is therefore unlikely at first, which is why SK Hynix's figures will probably be closer to reality in the first few years.

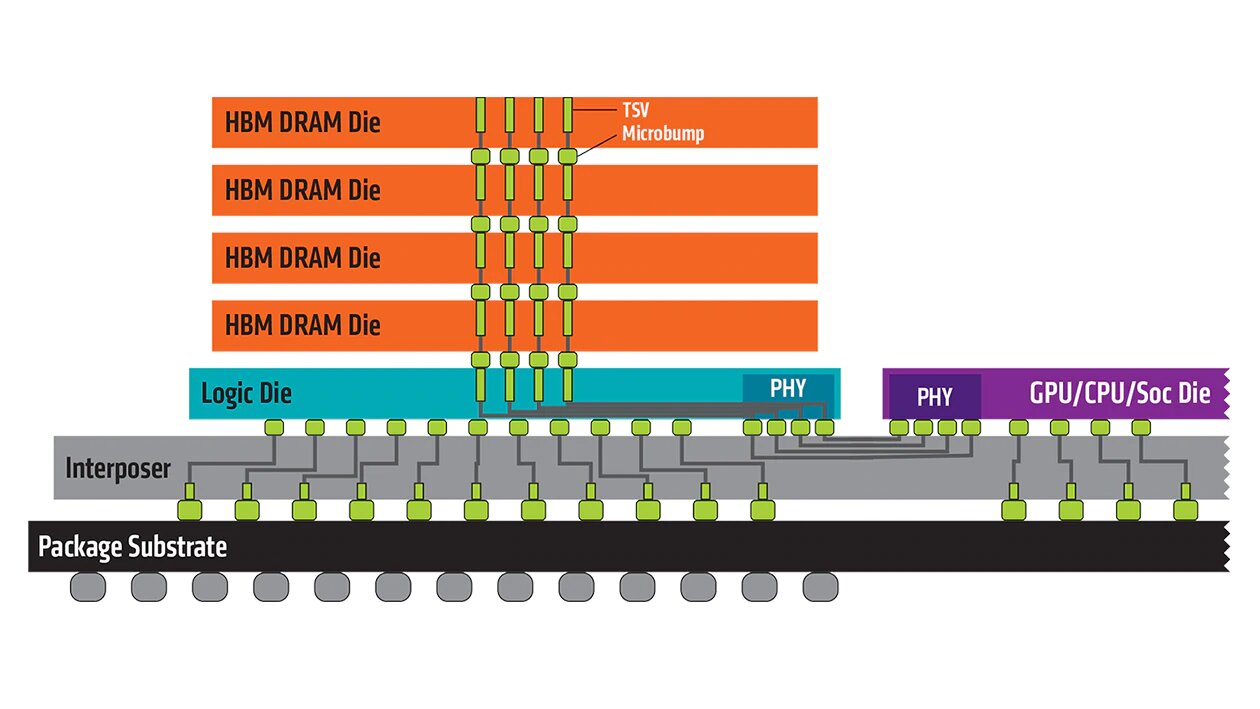

Structure of HBM (Image: AMD)

Up to 64 GB per stack

The structure of HBM3 will not change compared to HBM2 and the original HBM. Rambus continues to refer to a 2.5D interposer design: the actual memory chips are stacked and placed on an interposer together with the PHYs. The final capacity is not yet known. HBM2e currently reaches up to 24 GB with a stack of 12 memory dies; HBM3, as Rambus confirmed today, will use 16-die stacks for the first time, so at least 32 GB would be available from the start. In the long term, 64 GB per stack is the goal.
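The capacity figures follow from multiplying the number of stacked dies by the capacity of each die. The sketch below is illustrative only: the 16 Gbit (2 GB) die density is inferred from 24 GB across 12 dies, and the assumption that 64 GB would require 32 Gbit dies in a 16-die stack is ours, not a statement from Rambus. The function name `stack_capacity_gb` is hypothetical.

```python
# Sketch: capacity of one HBM stack = stacked dies x capacity per die.
# Assumed die densities: 16 Gbit (inferred from 24 GB / 12 dies) and
# a hypothetical 32 Gbit die for the long-term 64 GB target.


def stack_capacity_gb(dies: int, gbit_per_die: int) -> float:
    """Return stack capacity in GB (8 Gbit = 1 GB)."""
    return dies * gbit_per_die / 8


if __name__ == "__main__":
    print("HBM2e, 12 dies x 16 Gbit:", stack_capacity_gb(12, 16), "GB")
    print("HBM3, 16 dies x 16 Gbit:", stack_capacity_gb(16, 16), "GB")
    print("HBM3, 16 dies x 32 Gbit (assumed):", stack_capacity_gb(16, 32), "GB")
```

This reproduces 24 GB for today's HBM2e, 32 GB for a 16-die HBM3 stack with the same dies, and 64 GB only if denser dies become available.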

When that will happen is still uncertain. A first solution may appear in 2022, but if the past has taught one thing, it is that HBM always takes a little longer. 2023 or 2024 seems far more realistic.

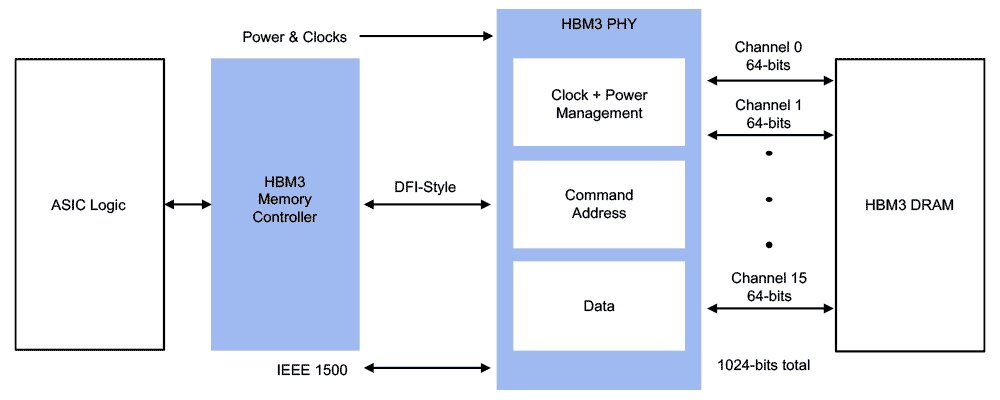

HBM3 memory subsystem (Image: Rambus)
